This is a work in progress. Here you can see the assets I'm generating, approaching it as if it were an animation project.

1. First, I wrote the script.

2. Then I made the shot breakdown to determine which assets, sets, and characters I need to create.

3. I made the directing and art direction decisions, establishing the pacing, tone, aesthetic, and lighting for each scene.

4. I chose the AI models and workflows to use in each case and adapted the prompts to each model.

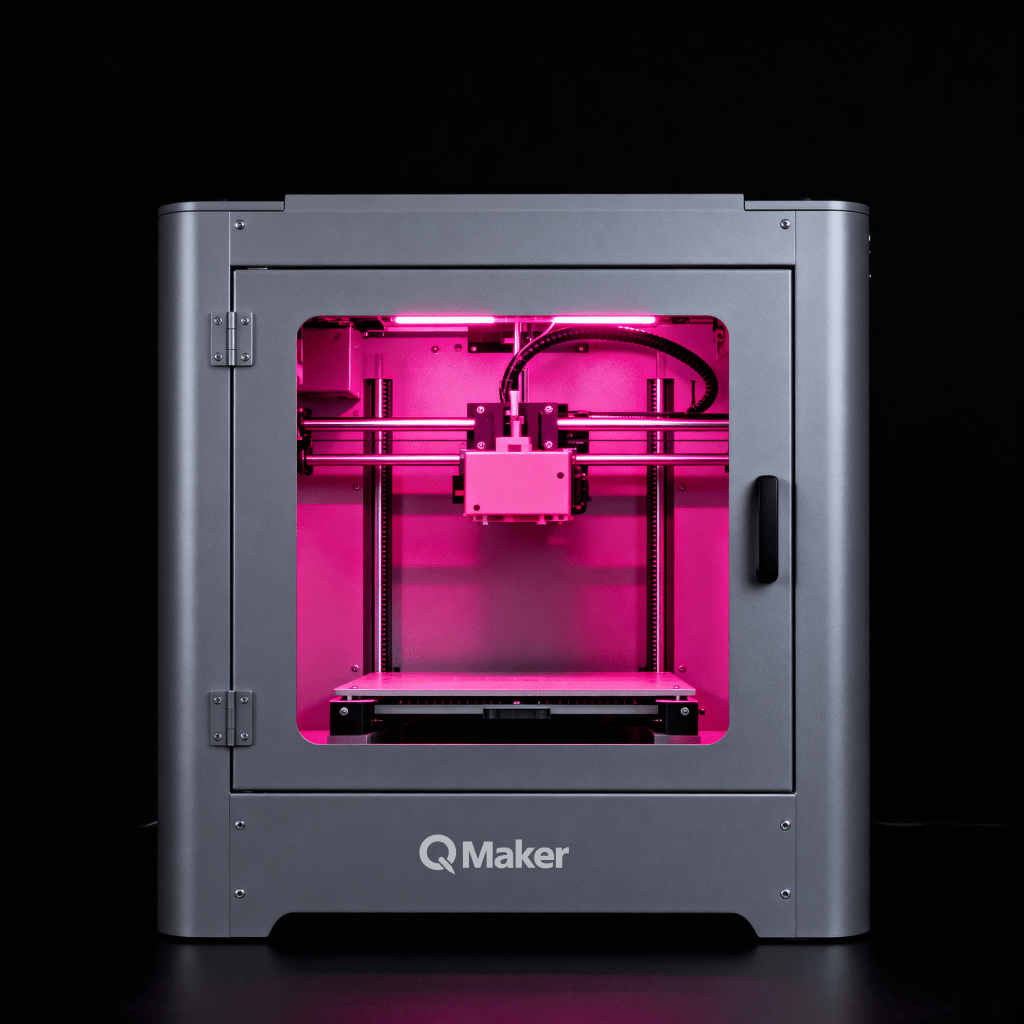

5. Then I trained several LoRAs for the characters and objects that need to stay consistent across shots, including Ama, the band's singer.

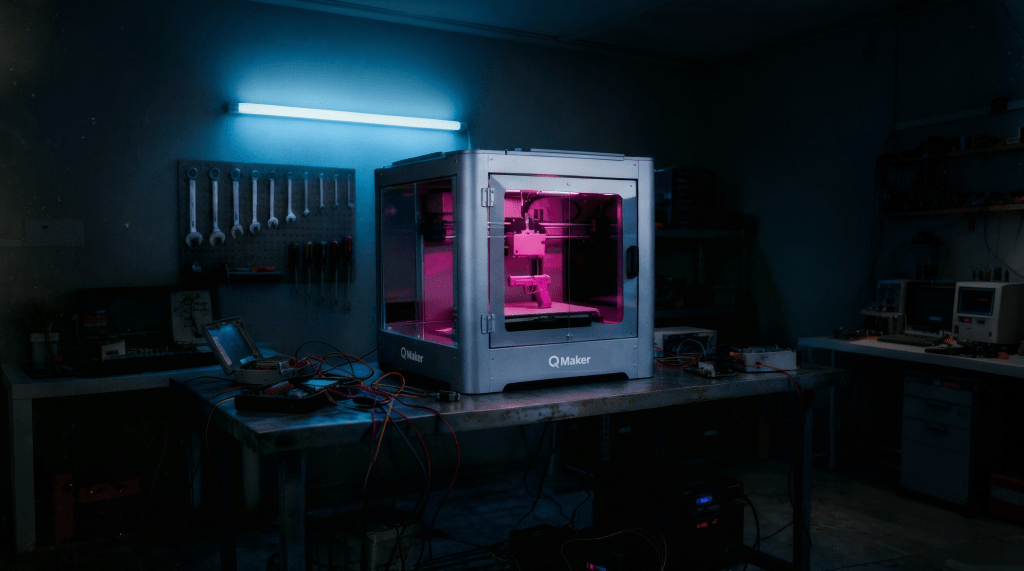

6. Next, I designed the sets for the different shots using generative AI and Photoshop, and I designed the main characters the way I would in a traditional animation project.

7. Next, I'll combine the sets with the characters to create the basic still shots.

8. Then I'll make an animatic with all the still frames and the music to find the rhythm of the video and spot anything that doesn't work. This way, I can replace it before generating the videos, since video generation is expensive in both time and money.

9. I'll animate all the shots with various generative AI tools, leveraging the strengths of each.

10. Finally, I'll post-produce the shots.

Here’s one of the working documents I created to set the art direction. Once the project is finished, I’ll share the script, the shot breakdown, and a detailed scene-by-scene workflow.